Blue-Green Deployments for Serverless Powered Applications on AWS

Oct 20, 2016 ~ 7 min read

First described by Martin Fowler back in 2010, blue-green deployment is a release technique that reduces downtime and risk by running two identical production environments called Blue and Green.

Fast-forwarding to 2013, Danilo Sato from ThoughtWorks published a very insightful article on the company blog that describes how to implement blue-green deployments using AWS. We, at Mitoc Group, work primarily with serverless computing from AWS, and today we'd like to share our experience applying the blue-green deployment process to serverless powered applications.

Note: This blog post intentionally uses screenshots from the AWS Management Console to show a Do-It-Yourself point of view, but we'll also provide (wherever possible) the equivalent devops command or tool, so that a more advanced audience is NOT bored to death :)

The key points to keep in mind as we move forward:

- We use a full stack approach to build web applications using serverless computing from AWS (not just AWS Lambda and API Gateway)

- We enforce security and least privilege access in every layer (e.g. front-end tier, back-end tier, data tier, as well as centralized monitoring)

- We apply the same approach to newly built applications, as well as newly cloud-migrated applications that are compatible with microservices architecture (we call them cloud-native applications)

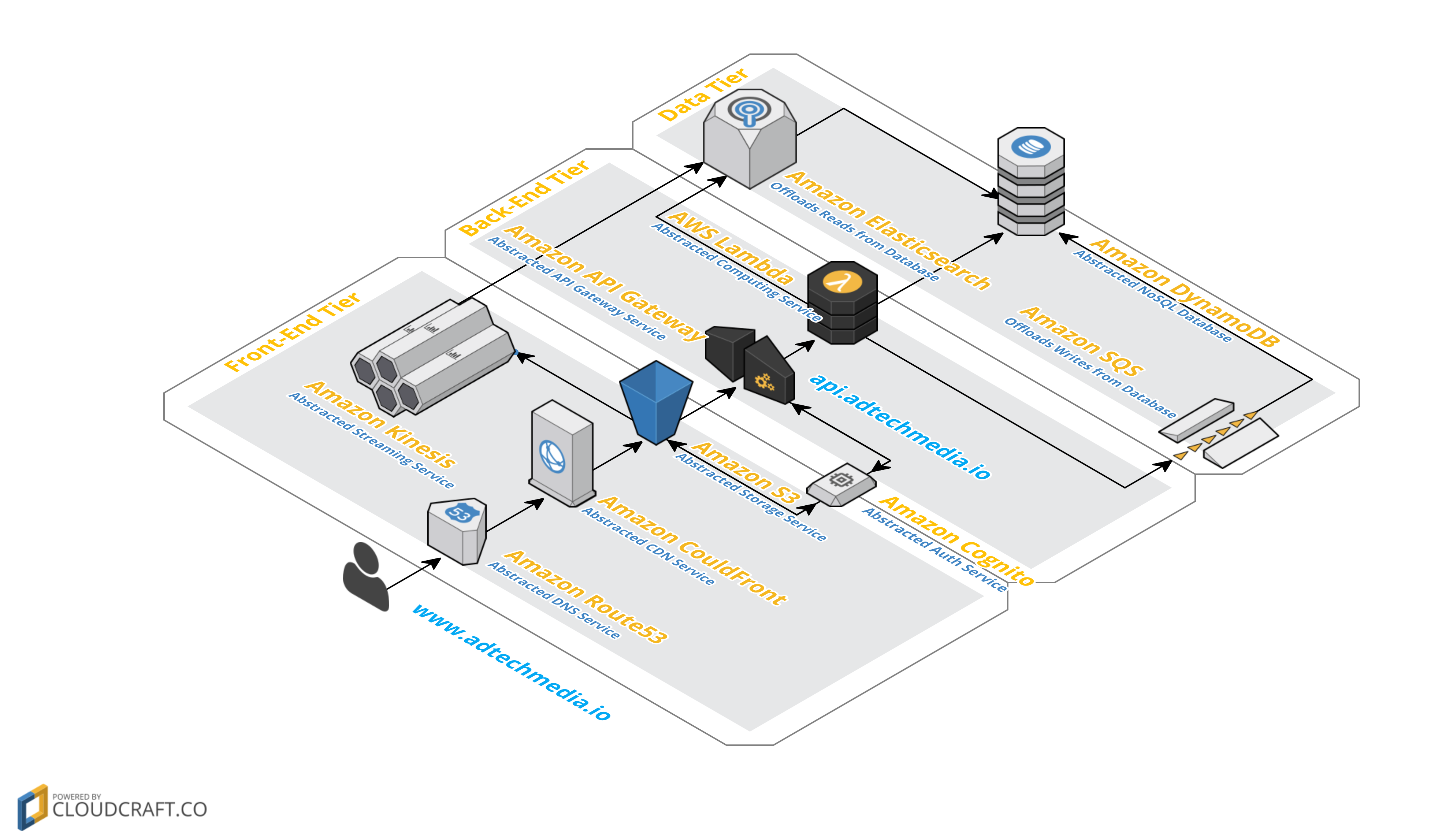

Serverless Architecture on AWS

Before we dive into the details of the blue-green deployment process for serverless powered applications, it's vital to point out the architecture of a typical web application that uses serverless computing from AWS (as shown in the picture below, as well as described in this blogpost).

Below is the summarized list of AWS products we use:

- Security tier: 1) AWS IAM and 2) Amazon Cognito

- Front-end tier: 3) Amazon Route53, 4) Amazon CloudFront and 5) Amazon S3

- Back-end tier: 6) Amazon API Gateway, 7) AWS Lambda and 8) Amazon SNS

- Data tier: 9) Amazon DynamoDB, 10) Amazon SQS, 11) Amazon ElastiCache and 12) Amazon Elasticsearch Service

- Monitoring tier: 13) Amazon CloudWatch, 14) AWS CloudTrail and 12) Amazon Elasticsearch Service

Note: As you can see above, a typical web application in our case uses 14 different services from AWS. Also, Amazon CloudSearch is a much better fit as a serverless option for full-text search, but we prefer Elasticsearch technology and therefore use Amazon Elasticsearch Service instead.

Pre-requisites and Initial Considerations

The blue-green deployment for serverless powered applications happens entirely on the front-end tier, mainly because all other resources from the back-end, data, monitoring and security tiers are duplicated and therefore are NOT altered during this process. So, going forward, we'll describe only the changes applied to Amazon Route53, Amazon CloudFront and Amazon S3 during a serverless blue-green deployment.

The simplest and most straightforward approach to blue-green deployments for serverless powered applications is to switch all traffic from the blue environment to the green environment at the DNS level (and, in case of failures, roll back from the green environment to the blue environment).

Managing DNS records can sometimes be very tricky, mainly because propagation can take an unpredictable amount of time due to the various caching layers on the Internet. But our experience with Amazon Route53 has been amazing, as long as we use A alias records instead of CNAME records. Below are 3 screenshots from the AWS Management Console that show how we've set up www1.adtechmedia.io in Amazon Route53, Amazon CloudFront and Amazon S3:
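As a rough equivalent of the Route53 part of that setup, here is a minimal Python sketch that builds the change batch for an A alias record pointing at a CloudFront distribution. The helper name and the distribution domain name are made up for illustration; the resulting dict has the shape of the `ChangeBatch` argument accepted by boto3's `change_resource_record_sets`, but the sketch itself makes no AWS call.

```python
# Hosted zone ID that Route 53 uses for every CloudFront alias target.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def alias_change_batch(record_name, distribution_domain, comment=""):
    """Build a Route 53 ChangeBatch that upserts an A alias record."""
    return {
        "Comment": comment,
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "AliasTarget": {
                        "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                        "DNSName": distribution_domain,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ],
    }


# Hypothetical distribution domain name, for illustration only.
batch = alias_change_batch(
    "www1.adtechmedia.io.",
    "dblue1111111111.cloudfront.net.",
    comment="blue environment",
)
print(batch["Changes"][0]["ResourceRecordSet"]["AliasTarget"]["DNSName"])
```

Unlike a CNAME, the alias record is of type A and carries an `AliasTarget` instead of a list of resource records, which is why it can also be used at the zone apex.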

Blue/Green Deployments v1

At this point, we are ready to switch from the blue environment to the green environment with zero downtime and low risk. The switch is quite simple:

Step 1: Update the CloudFront distribution for the blue environment by removing www1.adtechmedia.io from Alternate Domain Names (CNAMEs)

Step 2: Update the CloudFront distribution for the green environment by adding www1.adtechmedia.io to Alternate Domain Names (CNAMEs)

Step 3: Update Route53 A alias record with CloudFront distribution Domain Name from green environment
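The three steps can be sketched as a small planning function. This is only an illustration under stated assumptions: the distribution IDs, domain names, dict layout and step labels are hypothetical, and the function only computes what would change instead of calling CloudFront or Route53.

```python
# Hosted zone ID that Route 53 uses for every CloudFront alias target.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def plan_switch(host, source, target):
    """Plan the three steps that move `host` from `source` to `target`.

    `source` and `target` are plain dicts describing a distribution; the
    step names in the returned tuples are labels invented for this sketch.
    """
    source_aliases = [a for a in source["aliases"] if a != host]
    target_aliases = [a for a in target["aliases"] if a != host] + [host]
    alias_record = {
        "Name": host + ".",
        "Type": "A",
        "AliasTarget": {
            "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
            "DNSName": target["domain_name"] + ".",
            "EvaluateTargetHealth": False,
        },
    }
    return [
        ("remove-cname", source["id"], source_aliases),   # Step 1
        ("add-cname", target["id"], target_aliases),      # Step 2
        ("upsert-alias", host, alias_record),             # Step 3
    ]


# Hypothetical distribution IDs and domain names, for illustration only.
blue = {"id": "EBLUEEXAMPLE", "domain_name": "dblue1111111111.cloudfront.net",
        "aliases": ["www1.adtechmedia.io"]}
green = {"id": "EGREENEXAMPLE", "domain_name": "dgreen222222222.cloudfront.net",
         "aliases": []}

switch = plan_switch("www1.adtechmedia.io", blue, green)
for step in switch:
    print(step[0], step[1])
```

The order matters because CloudFront rejects a CNAME that is still attached to another distribution, so the removal has to come before the addition. Calling `plan_switch` with the two environments swapped yields the reverse plan.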

If, for some unexpected reason, your green environment starts generating a high level of failures, the rollback process is very similar to the one described above:

- Remove CNAME from green environment

- Add CNAME to blue environment

- Update Amazon Route53 with blue environment Domain Name

UPDATE on 11/03/2016: A friend pointed out that it's not necessary to add/remove CNAMEs (which could take up to 20 minutes to propagate). Instead, just leave the blue environment as it is (e.g. www1.adtechmedia.io) and set up a wildcard CNAME on the green environment (e.g. *.adtechmedia.io). When both distributions are enabled, blue will take precedence over green, making sure you're not stuck with the new deploy in case of a high level of failures.
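To make the precedence rule in this update concrete, here is a deliberately simplified Python model of it: an exact CNAME wins over a wildcard CNAME. The function and the dict layout are invented for this sketch, and it is not a description of how CloudFront resolves hosts internally.

```python
def serving_distribution(host, distributions):
    """Return the name of the distribution whose CNAME best matches host.

    Simplified model: an exact alias beats a wildcard alias such as
    "*.adtechmedia.io"; returns None when nothing matches.
    """
    wildcard_match = None
    for name, aliases in distributions.items():
        for alias in aliases:
            if alias == host:
                return name  # exact match always wins
            if alias.startswith("*.") and host.endswith(alias[1:]):
                wildcard_match = name
    return wildcard_match


both = {"blue": ["www1.adtechmedia.io"], "green": ["*.adtechmedia.io"]}
green_only = {"green": ["*.adtechmedia.io"]}

print(serving_distribution("www1.adtechmedia.io", both))        # blue
print(serving_distribution("www1.adtechmedia.io", green_only))  # green
```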

Blue/Green Deployments v2

As you have seen in the previous blue-green deployment process, traffic is switched between environments suddenly, at 100% capacity. This is great for zero downtime, but if your application starts to fail, all of your users are affected. Some modern continuous deployment techniques promote a more gradual switch of traffic between environments. For example, we push only 5% of requests to the green environment, while 95% still go to the blue environment. This makes it possible to detect production problems that never surfaced in the testing and staging phases early, and on a much smaller audience of users. Is it possible to enable such an approach for serverless powered applications?

Short answer, yes! We're very excited and humbled to be able to explain our serverless solution, but there are some additional pre-requisites that must be in place first. Let's describe the challenge first, and then jump into our implementation and pre-requisites.

The Challenge

Amazon CloudFront, the way it is designed, doesn't allow the same CNAME on multiple distributions. That is also the reason why we removed it from the blue environment and added it to the green environment in our previous implementation.

Our implementation

Amazon Route53 allows weighted routing of traffic across multiple Amazon CloudFront distributions, Amazon S3 static websites and other endpoints. So, instead of load balancing requests between distributions, we change the current A alias record that points to the blue environment from simple routing to weighted routing, and add another A alias record for the green environment that points directly to the Amazon S3 static website endpoint. This enables us to shift requests across environments as we wish: 95% vs 5% (as shown in the screenshot below), then (if everything is fine) 90% vs 10%, and so on until blue is 0% and green is 100%.
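A minimal Python sketch of this weighted setup could look as follows. The helper names, the `SetIdentifier` values, the blue distribution domain name and the us-east-1 region are assumptions made for illustration, and the S3 website hosted zone ID is left as a placeholder because it depends on the bucket's region. The sketch only builds the record sets and the weight progression; it does not call Route53.

```python
# Hosted zone ID that Route 53 uses for every CloudFront alias target.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"
# Placeholder: look up the S3 website hosted zone ID for your bucket's region.
S3_WEBSITE_HOSTED_ZONE_ID = "<S3-website-hosted-zone-id>"


def weighted_alias_record(name, set_identifier, weight, zone_id, dns_name):
    """Build one weighted A alias record set for Route 53."""
    return {
        "Name": name,
        "Type": "A",
        "SetIdentifier": set_identifier,  # distinguishes records sharing a name
        "Weight": weight,                 # share = weight / sum of all weights
        "AliasTarget": {
            "HostedZoneId": zone_id,
            "DNSName": dns_name,
            "EvaluateTargetHealth": False,
        },
    }


def weight_schedule(step=5):
    """Yield (blue, green) weights from 95/5 down to 0/100."""
    for green in range(step, 101, step):
        yield 100 - green, green


def rollout_records(name, blue_weight, green_weight):
    """Return the blue (CloudFront) and green (S3 website) record sets."""
    return [
        weighted_alias_record(name, "blue", blue_weight,
                              CLOUDFRONT_HOSTED_ZONE_ID,
                              "dblue1111111111.cloudfront.net."),  # hypothetical
        weighted_alias_record(name, "green", green_weight,
                              S3_WEBSITE_HOSTED_ZONE_ID,
                              "s3-website-us-east-1.amazonaws.com."),  # assumed region
    ]


schedule = list(weight_schedule())
first_stage = rollout_records("www.adtechmedia.io.", *schedule[0])
print(schedule[:2], schedule[-1])
```

Each stage would be applied as an UPSERT of both records, and you would only move to the next pair of weights after checking that the green environment's error rate stays acceptable.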

All changes are made at the Amazon Route53 level, without altering Amazon CloudFront or Amazon S3 resources. And compared to the previous blue-green deployment, the rollback process is even faster and easier. We remove the A alias record of the green environment and we're done! Well, almost done… For consistency and cost saving purposes, we also revert the A alias record of the blue environment from weighted routing back to simple routing.
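The rollback can be sketched as a single list of Route53 changes. This assumes that moving the blue record from weighted back to simple routing is expressed as deleting the weighted record and creating a simple one with the same alias target; the record values below are hypothetical and the zone ID for the S3 website endpoint is a placeholder.

```python
def rollback_changes(blue_weighted, green_weighted):
    """Build Route 53 changes that undo a weighted blue-green rollout."""
    # A simple record is the weighted one minus its weighting fields.
    blue_simple = {k: v for k, v in blue_weighted.items()
                   if k not in ("SetIdentifier", "Weight")}
    return [
        {"Action": "DELETE", "ResourceRecordSet": green_weighted},
        {"Action": "DELETE", "ResourceRecordSet": blue_weighted},
        {"Action": "CREATE", "ResourceRecordSet": blue_simple},
    ]


# Hypothetical record sets, for illustration only.
blue_record = {
    "Name": "www.adtechmedia.io.", "Type": "A",
    "SetIdentifier": "blue", "Weight": 95,
    "AliasTarget": {"HostedZoneId": "Z2FDTNDATAQYW2",
                    "DNSName": "dblue1111111111.cloudfront.net.",
                    "EvaluateTargetHealth": False},
}
green_record = {
    "Name": "www.adtechmedia.io.", "Type": "A",
    "SetIdentifier": "green", "Weight": 5,
    "AliasTarget": {"HostedZoneId": "<S3-website-hosted-zone-id>",  # placeholder
                    "DNSName": "s3-website-us-east-1.amazonaws.com.",
                    "EvaluateTargetHealth": False},
}

changes = rollback_changes(blue_record, green_record)
print([c["Action"] for c in changes])
```

Removing the green record alone is enough to send all traffic back to blue; the remaining two changes only tidy up the routing policy.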

Final Thoughts and Conclusion

What are the downsides (pre-requisites) of blue-green deployments v2?

- Amazon S3 static website hosting doesn't support SSL, so we find ourselves temporarily enforcing HTTP-only during blue-green deployment

- An Amazon S3 static website endpoint can be used with an Amazon Route53 A alias only if the bucket name is the same as the domain name (e.g. www.adtechmedia.io)

- Depending on the traffic size, specifically how much TPS you're consuming, Amazon S3 might start throttling you (more details here: Request Rate and Performance Considerations)

Unfortunately, there is no silver bullet that would work perfectly for every serverless powered application on AWS. As with any software, it's up to us (developers or devops engineers) to decide on the right process for a specific use case. We just wanted to share two different approaches that empowered us to provide high quality at scale without compromising on resources and costs (which, by the way, are ridiculously low, but that's another blog post).

Proud to announce that #AWSLambda team decided to feature @MitocGroup as framework partners. Thank you, @awscloud ! https://t.co/VzGkMefIZD

— Mitoc Group (@MitocGroup) February 16, 2016

Last but not least, Mitoc Group is a technology company that focuses on innovative enterprise solutions. Share your thoughts and your experience on LinkedIn, Twitter or Facebook.